Why Trust in AI Matters Now More Than Ever

Trust is the essential ingredient in all relationships—personal, professional, and now, increasingly, technological. As artificial intelligence becomes deeply woven into our lives, the challenge we face is not whether to use AI, but how to build trust with it. With digital humans now stepping into roles in customer service, healthcare, and education, our ability to discern and navigate this new reality is more critical than ever.

What Are Digital Humans? Understanding AI-Powered Avatars

Imagine this: you’re chatting with a customer support agent online. A lifelike face greets you warmly, responds instantly, and seems genuinely helpful. But it’s not a person—it’s a digital human. These AI-driven avatars are crafted to mimic real human behavior and interaction. From L’Oréal to T-Mobile to leading hospitals, digital humans are becoming trusted front-line communicators. They provide guidance, training, and even emotional support.

They look real. They sound real. But they’re not. So, how can we tell, and why does it matter?

Why Digital Authenticity Is Becoming Harder to Detect

AI-generated humans and content are becoming nearly indistinguishable from the real thing. These systems draw from massive datasets to emulate tone, expressions, and even body language. While they can be powerful tools, they also raise questions about transparency and authenticity.

Consumers—and leaders—need to know when they’re interacting with AI. Ethical companies should disclose AI use clearly, allowing people to make informed decisions. As these avatars become more prevalent, the line between human and machine continues to blur.

The Core of Human Trust: Emotional and Social Intelligence

Humans build trust through emotional connection, shared experience, and intuition. Our brains are wired for meaning, not just logic. We pick up on nuance, body language, and cultural context—things AI still struggles to authentically replicate. Social and emotional intelligence are central to building strong, resilient relationships. Whether in partnerships or leadership, it’s the human ability to empathize and respond that creates real value. AI can simulate responses, but it doesn’t feel them.

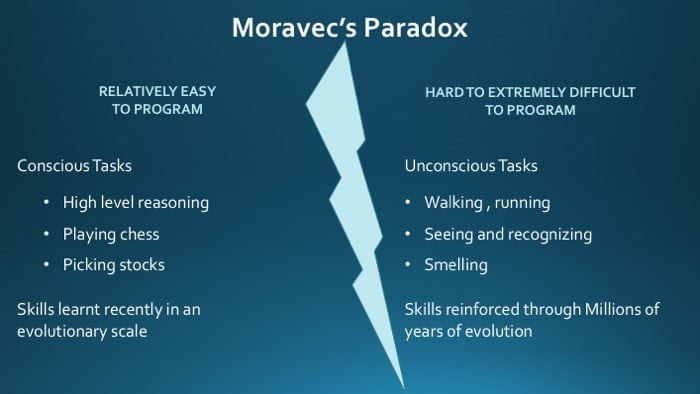

Moravec’s Paradox: Why Simple Human Tasks Are Complex for AI

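Moravec’s paradox is the observation that tasks people find hard, such as solving equations or crunching data, are comparatively easy for machines, while skills people find effortless, such as perception and intuition, are hard for them. AI may calculate faster than we ever could, but it struggles with intuitive and sensory processing. It’s precisely those human qualities (empathy, timing, subtlety) that are the hardest for machines to reproduce.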

Human Brain vs. Generative AI: Key Differences in Cognition

Generative AI models such as ChatGPT and DALL·E 3 have made remarkable strides in mimicking human-like output, but the underlying processes differ fundamentally from human cognition. Here is how the two compare:

Human Cognition:

- Causal Reasoning: We understand cause-and-effect, adapting to new or changing situations.

- Emotional Intelligence: We interpret tone, emotions, and intent.

- Contextual Awareness: Our decisions are rooted in culture, experience, and lived reality.

Generative AI:

- Pattern Recognition: AI identifies patterns in massive datasets to produce statistically plausible outputs.

- No True Understanding: AI doesn’t “know” anything; it predicts.

- Limited Contextual Adaptation: While powerful, it can misinterpret subtlety or meaning.
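To make "it predicts" concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or any production model works internally; it is a toy word-pair counter, and the function names and sample text are invented for illustration. It only shows the basic idea of choosing the statistically most likely next word with no understanding of meaning.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Invented sample text, used only to illustrate the idea.
corpus = "trust builds teams and trust builds partnerships and trust builds value"
model = train_bigrams(corpus)

print(predict_next(model, "trust"))    # the word seen most often after "trust"
print(predict_next(model, "empathy"))  # a word the toy model has never seen
```

The toy model continues "trust" with whatever followed it most often in its data, and it has nothing to offer for a word it never saw. Real systems are vastly larger and more capable, but the output is still a prediction drawn from patterns rather than from lived experience.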

A study in Scientific Reports found that people with higher fluid intelligence were better at identifying AI-generated text, highlighting that human discernment remains critical.

Why AI-Generated Content Feels So Convincing

AI sounds real because it’s designed to mirror how we communicate. These systems are trained to produce fluent, coherent language and imagery that mimics what we already trust.

That’s why AI-generated reviews, deepfakes, and synthetic news can be so difficult to spot. It’s not that they’re malicious by default—they’re just eerily convincing.

Practical Tips: Spotting AI vs. Human Content

Here are some quick ways to tell the difference:

Text Clues:

- Overly generic language or emotional flatness

- Repetitive phrasing (e.g., “let’s explore,” “to sum up”)

- Fact-check anything that seems “too perfect”
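For readers who want to try the repetitive-phrasing check on their own text, a minimal Python sketch follows. The phrase list is an assumption made for illustration (only the first two entries come from the tips above), and a high count is a weak hint at best, since people use these phrases too.

```python
import re

# Illustrative phrase list: the first two come from the tips above,
# the rest are assumed examples and can be swapped for your own.
STOCK_PHRASES = ["let's explore", "to sum up", "in conclusion", "it is important to note"]

def stock_phrase_hits(text):
    """Return how often each stock phrase appears in the text (case-insensitive)."""
    # Normalize curly apostrophes so "Let’s" and "Let's" are counted together.
    normalized = text.lower().replace("’", "'")
    hits = {}
    for phrase in STOCK_PHRASES:
        count = len(re.findall(re.escape(phrase), normalized))
        if count:
            hits[phrase] = count
    return hits

sample = "Let’s explore trust. Let's explore AI. To sum up, trust matters."
print(stock_phrase_hits(sample))
```

Treat the result as one signal among several, alongside the tone and fact-checking clues in the list.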

Image Clues:

- Check for distorted features (like hands or eyes)

- Use reverse image search and forensic tools such as TinEye or FotoForensics

- Zoom in—edges and lighting glitches often reveal AI

Digital Human Clues:

- “Too perfect” or slightly robotic timing in responses

- Shallow emotional expression

- Ethical companies will clearly disclose AI use

Partnering for Innovation: Ignite AI at AchieveUnite

At AchieveUnite, we see AI as a practical and thoughtful extension of the trust-building work we’ve been doing for over three decades. Over the past year and a half, I’ve worked closely with our Chief Innovation Officer, Jessica Baker, and our team to develop Ignite AI, the first generative AI platform that applies proven partnering frameworks to modern tools and decision-making.

Ignite AI isn’t meant to replace people; it’s built to support how we think, connect, and lead. It brings consistency, insight, and perspective to how leaders grow trust across teams, partners, and organizations, helping them take their partnering success to the next level.

Building Trust in an AI-Driven World: What Leaders Must Do

To lead in this new era, we must:

- Embrace responsible AI use

- Be transparent about AI integration

- Educate our teams and communities

- Promote human-centered collaboration

We don’t need to fear AI; we need to guide it. With awareness and intention, it becomes a tool for good.

Looking ahead, one thing is clear: the future will be shaped by how we choose to work with AI. That means working neither against it nor in place of people, but with intention and responsibility. Trust remains the guidepost. With the right foundation, AI becomes a reliable tool that supports better decisions, stronger partnerships, and greater impact.

Let’s continue to lead with clarity and confidence, making room for innovation without losing the human perspective. Trust will always begin—and end—with humans. As we integrate more digital systems into our daily lives, let’s do it in a way that honors our values, centers empathy, and puts purpose at the heart of progress.

Frequently Asked Questions (FAQs)

What is a digital human in AI?

A digital human is an AI-powered avatar designed to simulate real human interaction through voice, facial expressions, and gestures.

Can AI be trusted in professional settings?

Yes, but only when used ethically and with full transparency. Building trust requires clarity, human oversight, and purpose-driven implementation.

How can I detect if content was written by AI?

Look for repetitive phrasing, emotional flatness, and too-perfect language. Tools like GPTZero can help, but no detector is fully reliable; OpenAI withdrew its own AI text classifier in 2023, citing low accuracy.

What industries are using digital humans today?

Digital humans are used in customer service, healthcare, education, retail, and entertainment to offer scalable, personalized experiences.

What is AchieveUnite’s Ignite AI, and how does it help?

Ignite AI combines decades of partnership-building frameworks with AI innovation to create a platform that fosters success with integrity and trust.